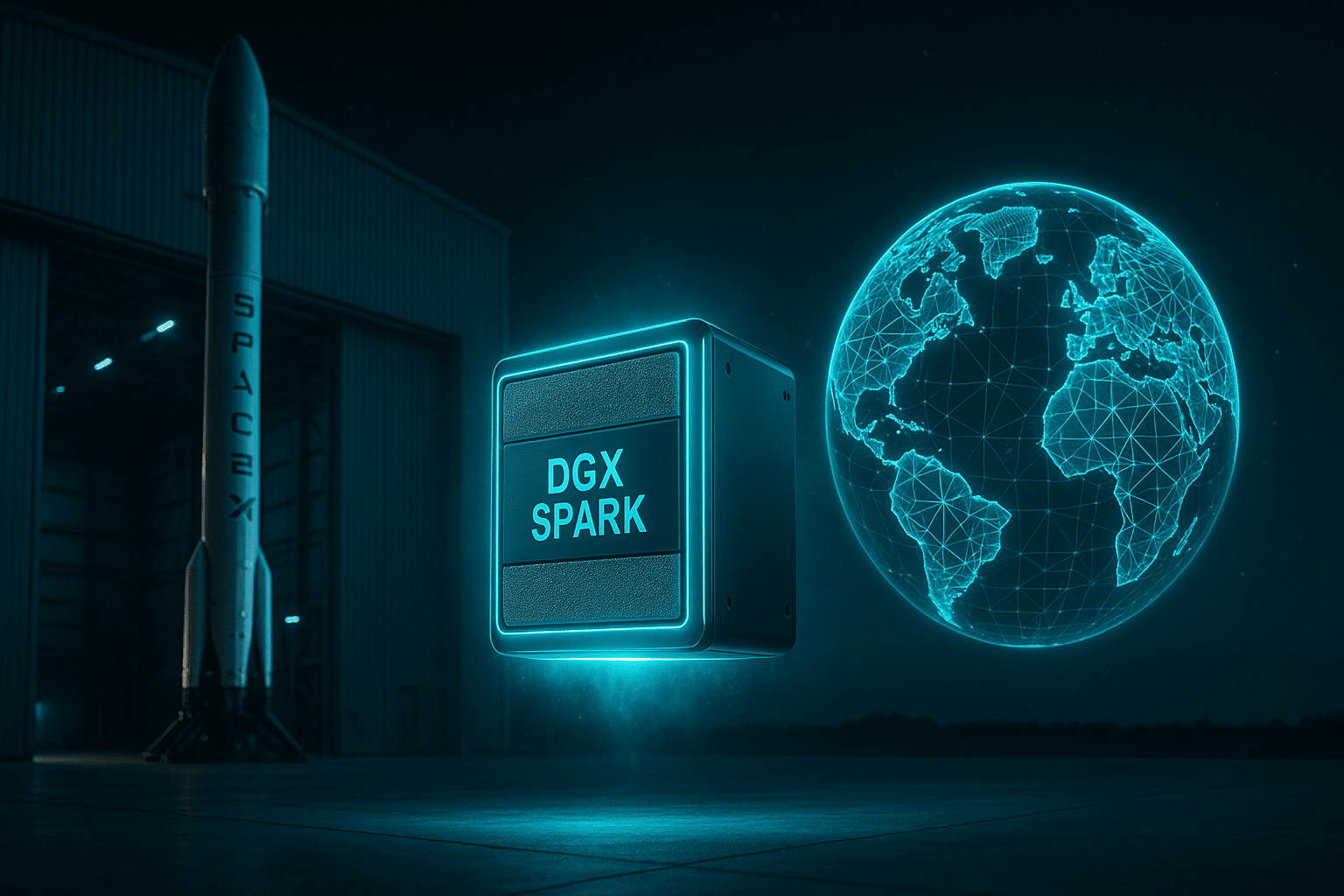

A Moment That Redefined the Future of Compute

When NVIDIA CEO Jensen Huang personally delivered a DGX Spark AI supercomputer to Elon Musk at SpaceX’s Starbase, the world witnessed more than a ceremonial hand-off.

It symbolized the moment when supercomputing left the data center and arrived at the edge.

Built on NVIDIA’s GB10 Grace Blackwell Superchip, DGX Spark delivers up to 1 petaflop of AI performance at FP4 precision within a desktop-sized footprint, a compression of capability once reserved for hyperscale infrastructure.

The power that once filled racks now fits on a single developer’s workstation.

Why SpaceX — and Why Now

SpaceX runs on split-second data: rocket telemetry, autonomous docking, launch safety.

Hosting a DGX Spark locally showcases the growing need for immediate, on-site AI compute — where every millisecond counts.

This moment reflects a larger trend across industries: AI is moving closer to its data sources — whether that’s a satellite feed, a factory camera, or a vehicle sensor.

The Age of Edge Supercomputing Has Begun

For years, AI was bound to the cloud. Now, compute gravity is reversing.

Why the Edge is Winning:

- Latency kills insight: Real-time applications can’t wait for cloud responses.
- Privacy and sovereignty: Sensitive data stays on-site.
- Bandwidth efficiency: Process signals locally; send only results upstream.
- Operational cost: Continuous cloud inference is costly; edge compute scales economically.

DGX Spark embodies this philosophy: intelligence should be everywhere, not centralized.
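To make the bandwidth point concrete, here is a minimal sketch of "process locally, send only results upstream." The `summarize_window` function, the sample readings, and the alert threshold are all hypothetical and chosen for illustration; they are not taken from any NVIDIA or NiDA AI product.

```python
import json
import statistics


def summarize_window(samples, threshold):
    """Reduce a window of raw sensor readings to a small summary dict."""
    return {
        "count": len(samples),
        "mean": round(statistics.fmean(samples), 3),
        "max": max(samples),
        # Number of readings above the (hypothetical) alert threshold.
        "alerts": sum(1 for s in samples if s > threshold),
    }


# Hypothetical window of 1,000 raw integer readings from one sensor.
raw = [200 + (i % 50) for i in range(1000)]
summary = summarize_window(raw, threshold=245)

# Compare what would cross the network in each case.
raw_bytes = len(json.dumps(raw).encode())
summary_bytes = len(json.dumps(summary).encode())
print(summary)
print(f"raw payload: {raw_bytes} bytes, summary payload: {summary_bytes} bytes")
```

Only the summary needs to leave the device; the raw window can be discarded or kept on local storage, depending on retention needs.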

How This Aligns with NiDA AI’s Vision

At NiDA AI, we’re engineering this same paradigm: embedding advanced AI directly at the edge.

Our R&D focus is on compact, high-performance edge nodes capable of running deep-learning inference, signal processing, and multimodal analytics in real time.

These systems are being designed for:

- Driver and occupant monitoring
- Smart surveillance analytics
- Industrial safety and predictive monitoring
- Healthcare and vital-sign analysis

Each deployment operates as a micro-AI hub, processing data locally and syncing with the cloud only when necessary — the same distributed model that DGX Spark now symbolizes on a global scale.

NiDA AI’s mission is to make every device its own intelligence center.
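The "process locally, sync only when necessary" pattern described above can be sketched as a simple store-and-forward buffer. This is an illustrative assumption, not NiDA AI’s actual design: the `EdgeSyncBuffer` class, its `batch_size` parameter, and the `urgent` and `online` flags are hypothetical names.

```python
from collections import deque


class EdgeSyncBuffer:
    """Hypothetical store-and-forward buffer for an edge node."""

    def __init__(self, upload, batch_size=3):
        self.upload = upload          # callable that sends a list of results upstream
        self.batch_size = batch_size  # sync once this many results are pending
        self.pending = deque()

    def record(self, result, urgent=False, online=True):
        """Queue a locally computed result; return True if a sync happened."""
        self.pending.append(result)
        should_sync = urgent or len(self.pending) >= self.batch_size
        if should_sync and online:
            self.upload(list(self.pending))
            self.pending.clear()
            return True
        # Offline or below the batch threshold: keep results on the device.
        return False


sent = []  # stands in for the cloud endpoint in this sketch
buf = EdgeSyncBuffer(upload=sent.append, batch_size=3)
buf.record({"event": "ok"})
buf.record({"event": "ok"}, online=False)
buf.record({"event": "alert"}, urgent=True)
print(len(sent), "batch uploaded,", len(buf.pending), "results pending")
```

Routine results wait in the buffer until a batch fills or the link returns, while an urgent result triggers an immediate upload of everything pending.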

Mini Supercomputers, Mega Impact

The DGX Spark represents the beginning of hardware minimalism in AI infrastructure — smaller devices doing extraordinary work.

Over the next decade, we’ll see an ecosystem of interconnected micro-supercomputers forming a global AI mesh: each node, whether a machine sensor, a retail camera, or a wearable, will run its own optimized model, communicate efficiently, and operate independently.

At NiDA AI, we’re developing the orchestration layer that makes this possible — enabling real-time edge inference, secure syncing, and cross-node collaboration across intelligent devices.

Beyond PR: A Strategic Signal

Many viewed the hand-delivery as a marketing gesture.

In reality, it marks a strategic shift — the start of a new computing era where intelligence resides at the source of data.

From aerospace to manufacturing, enterprises are re-architecting their systems to reduce latency, enhance autonomy, and gain real-time control.

The Takeaway for Enterprises

- Build your edge layer now: waiting for cloud capacity is no longer viable.
- Adopt modular hardware: scale from prototype to production seamlessly.
- Prioritize inter-node intelligence: the real advantage comes from coordination among distributed AI units.

NVIDIA delivered the DGX Spark to SpaceX.

NiDA AI is delivering the spark of intelligence to the world’s edge.

Conclusion

The DGX Spark delivery isn’t just a headline — it’s a harbinger.

The future of AI belongs to distributed systems, local intelligence, and real-time decision making at the edge.

At NiDA AI, we see this moment as confirmation of our path: building a world where every device — from factory floor to frontline camera — thinks for itself.

Call to Action

If your organization is exploring Edge AI, Industrial Automation, or Real-Time Analytics, reach out to NiDA AI. We’ll help you architect the intelligent edge that moves your business forward.