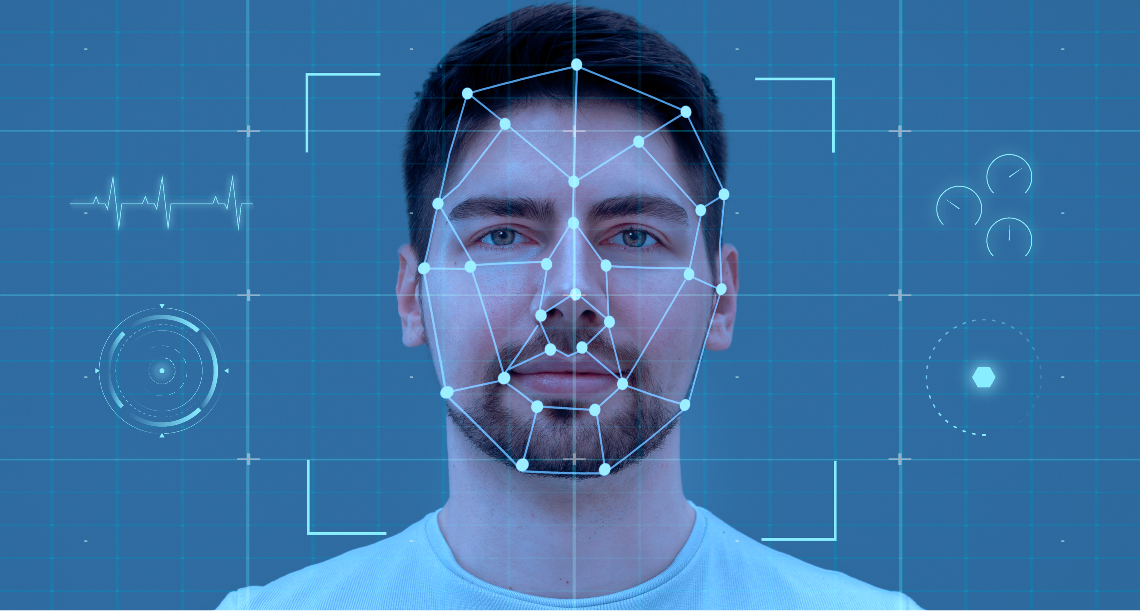

At Nida Ai, we have developed an innovative solution that enables non-contact heart rate (HR) estimation using facial video analysis, providing a convenient and unobtrusive method for monitoring vital signs.

Traditional heart rate monitoring typically relies on contact-based devices such as electrocardiographs (ECG) and contact photoplethysmography (cPPG) sensors. While effective, these devices can cause discomfort and are not always practical for continuous monitoring, especially in non-clinical settings. There is a growing need for contact-free, user-friendly solutions that support regular health monitoring.

Recent advancements have introduced remote HR estimation methods using facial videos, primarily based on remote photoplethysmography (rPPG). These approaches detect subtle color changes in the skin caused by blood flow. However, many existing methods are limited to controlled environments and struggle with factors such as head movements, varying lighting conditions, and diverse camera qualities, which can significantly affect accuracy and reliability.
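To make the rPPG idea concrete, here is a minimal sketch of the classical signal-processing baseline, not Nida Ai's model. It assumes one mean skin-color value per frame has already been extracted (for example, the average green intensity over a cheek region) and looks for the strongest periodic component inside an assumed 40-180 bpm search band. The function name `estimate_bpm`, the band limits, and the synthetic trace are all illustrative assumptions.

```python
import cmath
import math


def estimate_bpm(trace, fps, lo_bpm=40, hi_bpm=180):
    """Return the dominant frequency of `trace`, in beats per minute.

    `trace` holds one mean skin-color value per video frame. The 40-180 bpm
    search band is an assumed plausible heart-rate range, not a value taken
    from the text.
    """
    mean = sum(trace) / len(trace)
    centered = [v - mean for v in trace]  # drop the constant skin-tone offset

    best_bpm, best_power = lo_bpm, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        freq_hz = bpm / 60.0
        # Single-bin discrete Fourier transform at the candidate frequency.
        bin_sum = sum(
            v * cmath.exp(-2j * math.pi * freq_hz * n / fps)
            for n, v in enumerate(centered)
        )
        power = abs(bin_sum) ** 2
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic 10-second trace at 30 fps: a 72 bpm pulse plus a slow, weaker
# illumination drift. Real traces would come from video frames.
fps = 30
trace = [
    120.0
    + 1.0 * math.sin(2 * math.pi * (72 / 60) * n / fps)  # pulse component
    + 0.5 * math.sin(2 * math.pi * 0.1 * n / fps)        # lighting drift
    for n in range(10 * fps)
]
print(estimate_bpm(trace, fps))
```

A baseline like this works on clean, well-lit footage, but head movement or lighting changes can easily place a stronger peak inside the search band than the pulse itself. That is the weakness described above.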

Our solution addresses these limitations by combining remote photoplethysmography (rPPG) with deep learning models. It estimates heart rate from facial video captured with a standard RGB camera, with no physical contact and no dedicated sensor.

Our system is built on an end-to-end deep learning pipeline that processes spatial-temporal features from facial regions of interest (ROIs). It detects the subtle skin color variations caused by blood flow and estimates HR from them. A convolutional neural network (CNN) extracts patterns from these signals, and a Gated Recurrent Unit (GRU) refines the predictions by modeling temporal dependencies across consecutive frames. Combining the two models improves robustness under challenging conditions such as varying lighting, motion artifacts, and differing camera quality.
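One way to picture the spatial-temporal features from facial ROIs is as a small map with one row per ROI and one column per frame. The sketch below is an assumption about how such a map could be built, not the production pipeline. It splits an already-cropped face into a regular grid, averages each color channel per cell, and min-max scales each trace over time. The grid layout, the function names, and the scaling step are hypothetical choices made for illustration.

```python
def roi_means(face, grid_rows, grid_cols):
    """Average each color channel inside every cell of a grid over `face`.

    `face` is a cropped face image given as rows of (r, g, b) tuples and is
    assumed to be at least grid_rows x grid_cols pixels.
    Returns one [r_mean, g_mean, b_mean] list per grid cell, row-major.
    """
    height, width = len(face), len(face[0])
    means = []
    for gr in range(grid_rows):
        y0, y1 = gr * height // grid_rows, (gr + 1) * height // grid_rows
        for gc in range(grid_cols):
            x0, x1 = gc * width // grid_cols, (gc + 1) * width // grid_cols
            pixels = [face[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            means.append([sum(p[c] for p in pixels) / len(pixels) for c in range(3)])
    return means


def spatial_temporal_map(faces, grid_rows=2, grid_cols=2):
    """Stack per-ROI color means over time into st_map[roi][frame][channel].

    Each ROI/channel trace is min-max scaled to [0, 1] so that the small
    pulse-driven variation is not swamped by absolute skin tone.
    """
    per_frame = [roi_means(face, grid_rows, grid_cols) for face in faces]
    n_rois = grid_rows * grid_cols
    st_map = [[[0.0] * 3 for _ in faces] for _ in range(n_rois)]
    for roi in range(n_rois):
        for c in range(3):
            series = [per_frame[t][roi][c] for t in range(len(faces))]
            lo, hi = min(series), max(series)
            span = (hi - lo) or 1.0  # guard against a perfectly flat trace
            for t, value in enumerate(series):
                st_map[roi][t][c] = (value - lo) / span
    return st_map


# Five synthetic 4x4 "face crops" whose green channel brightens frame by frame.
faces = [
    [[(100, 80 + t, 60) for _ in range(4)] for _ in range(4)]
    for t in range(5)
]
st_map = spatial_temporal_map(faces)
print(len(st_map), len(st_map[0]), len(st_map[0][0]))  # ROIs, frames, channels
```

A map of this shape can be treated like an image, which is what makes it a natural input for a CNN.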

Spatial-Temporal Representation: Captures HR signals from multiple facial ROIs, accounting for spatial and temporal variations.

Convolutional Neural Network (CNN): Processes the spatial-temporal representations to estimate HR accurately.

Gated Recurrent Unit (GRU): Models temporal dependencies between consecutive HR measurements, improving robustness against motion and lighting changes.

Large-Scale Multi-Modal Database: Utilizes a comprehensive database containing diverse facial videos with variations in head movements, illumination, and device types to train and validate the model.
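The role of the GRU among the components above can be illustrated with a single scalar recurrent step. This sketch shows only the mechanics of the gating equations. The weights are hand-picked placeholders rather than trained values, the normalization of bpm values is an assumption, and `gru_step` is a hypothetical helper rather than part of any library. The update convention used is h_new = (1 - z) * h + z * candidate, and some references swap the roles of z and 1 - z.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x, h, w):
    """One step of a scalar gated recurrent unit.

    x is the current input, h the previous hidden state, w a dict of weights.
    """
    z = sigmoid(w["wz"] * x + w["uz"] * h + w["bz"])             # update gate
    r = sigmoid(w["wr"] * x + w["ur"] * h + w["br"])             # reset gate
    cand = math.tanh(w["wh"] * x + w["uh"] * (r * h) + w["bh"])  # candidate state
    return (1.0 - z) * h + z * cand                              # blend old and new


# Placeholder weights chosen by hand for illustration; a real model learns them.
WEIGHTS = {
    "wz": 0.0, "uz": 0.0, "bz": -1.0,
    "wr": 0.0, "ur": 0.0, "br": 2.0,
    "wh": 1.0, "uh": 0.5, "bh": 0.0,
}

# Per-clip HR estimates in bpm; the 110 stands in for a motion-corrupted clip.
bpm_estimates = [72, 73, 72, 110, 73, 72]
inputs = [(bpm - 75) / 25 for bpm in bpm_estimates]  # assumed normalization

h = 0.0
states = []
for x in inputs:
    h = gru_step(x, h, WEIGHTS)
    states.append(h)
print([round(s, 3) for s in states])
```

Because each new state blends the previous state with a bounded candidate, a single outlier moves the hidden state far less than it moves the raw input. With learned weights, the gates can additionally adapt how much of each new measurement to trust.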

Non-Contact: Provides a comfortable and hygienic method for HR monitoring without the need for physical sensors.

Robustness: Maintains accuracy across various conditions, including different lighting, head movements, and camera qualities.

Scalability: Suitable for widespread use in various applications, from personal health monitoring to large-scale public health initiatives.

User-Friendly: Offers an unobtrusive and convenient solution for continuous health monitoring.